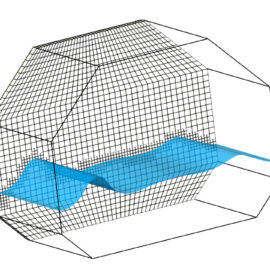

From Autumn 2023 to Spring 2024, CFD Direct is running its OpenFOAM Training courses (Essential CFD, Applied CFD and Programming CFD), fully updated with the latest features of the version 11 release of OpenFOAM. The training uses new features in OpenFOAM v11 for more productive and effective CFD, including: modular solvers; the snappyHexMeshConfig utility to simplify meshing; the foamToC utility to search OpenFOAM; updated post-processing functionality; and enhancements to dictionary input syntax. Essential and Applied CFD courses are available 18–22 Sep and 2–6 Oct 2023, and 29 Jan–2 Feb and 4–8 Mar 2024. Programming CFD is available 23–25 Oct and 14–16 Nov 2023, and 12–14 Feb and 18–20 Mar 2024.

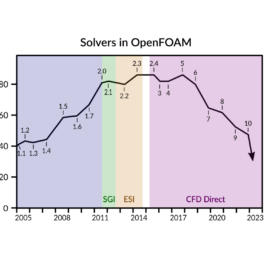

OpenFOAM v11 Training